Tolulope Akinmoju

I love to analyze everything data

View My LinkedIn Profile

View My Tableau Profile

Nashville Housing- A Data Cleaning Project in SQL

Introduction

My aim for this project is to clean and make the dataset ready for analysis. Real world data are often messy data and insights drawn from a messy or questionable data can lead to poor business decisions. This singular action can draw a business down the wrong path, and it can also reduce business productivity. Safe to say that insights from any data are only as good as the quality of the data, no wonder research have shown that about 80% of an analyst’s time goes to data cleansing and preparation. Data cleaning is performed between data collection and the data analyses.

Problem description

What exactly do you look out for in a data? Some of the common tasks to do when you get a data include but not limited to:

• Remove irrelevant data

• Remove duplicate data

• Fix structural errors

• Do type conversion

• Handle missing data

• Deal with outliers

• Standardize/Normalize data

• Validate data

Data

The dataset used for this Data Cleaning project is a 19 column table for Nashville and it can be found [here]

Analyses

I started out by checking to get an overview of the data

My first task was to standardize the ‘SaleDate’ column. The current format is in YYYY-MM-DD HH:MM:SS but since the value of HH:MM:SS are all zeros in the original data, I thought it will be okay to remove the HH:MM:SS. To do this I used the CONVERT statement

Another structural error I thought to fix was to change Y and N to YES and NO in “Sold as Vacant” column. The initial column shows four different categorical values (Y, N, Yes, No) instead of two categories.

To format this to give just two categories, The conditional statement CASE was used.

Next task was to populate the missing PropertyAddress data

From the output above we can see that the PropertyAddress column has a lot of null values.

If we take a closer look the ParcelID column (ParcelID 44/45, 61/62), we will realize that when the ParcelID are the same, the PropertyAddress are also the same. It is safe to use the ParcelID as a reference for populating the missing value in the PropertyAddress column as shown below.

To do this, the query below was used to populate the missing PropertyAddress using a SELFJOIN on the table such that If a.ParcelID = b.ParcelID, then populate missing a.PropertyAddress to be = b.PropertyAddress provided a.UniqueID <>b.UniqueID.

It works, rows 8 and 9 shows the update was successful.

Then I updated the PropertyAddress with the query below

From the last task I saw that the PropertyAddress isn’t formatted correctly, I decided to parse the long-formatted by breaking it down into individual column Address, City. To do this, I split the PropertyAddress such that a column has the address while the other has the city.

In the same vein, I decided to fix the OwnerAddress as it was formatted to include Address, City and State in a single Column. I opted to split entire address to contain individual columns.

I also wanted to remove duplicate rows from my table and to do this I will use CTE and window ranking function Row_Number().

The last column on the output table shows the duplicate rows, now to do the actual delete from the table, I used the query below.

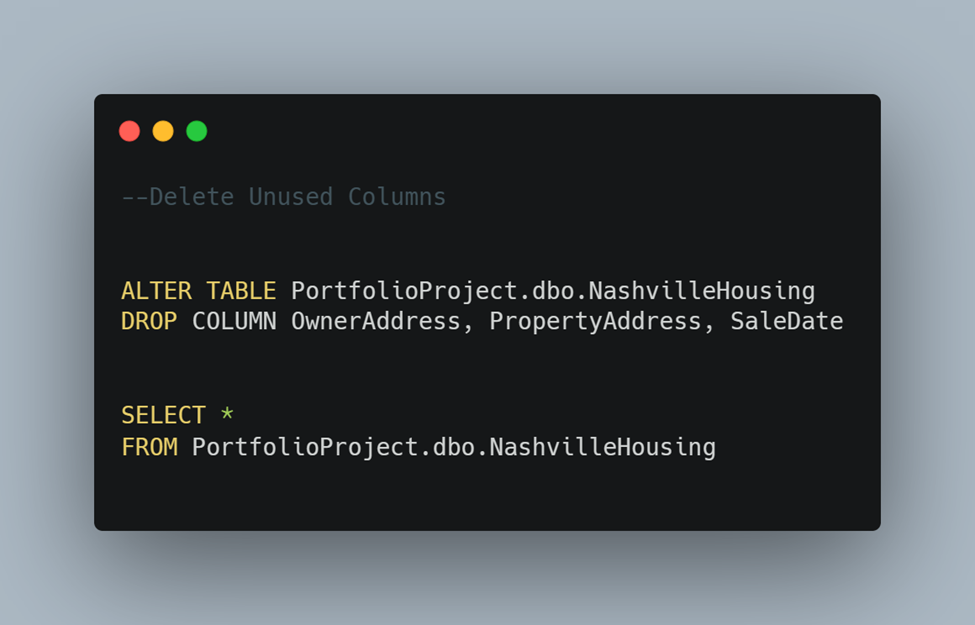

Finally, I removed the unused column from the table using the DROP statement. I did this manly because I had created a better format for the columns during my analysis and as such, I have no need for them.

Please note that it is not a good practice to delete columns from the table especially if you are working with raw data from the database. If you must do this, please create a backup of your original data because once it is deleted, the data is gone.

And it’s a wrap. The dataset is cleaned and set for further analysis.

Recap

In this project I standardized date format, fixed structural errors, populated missing values, split long-formatted address column, removed duplicates, and lastly deleted unused columns.

All my codes are linked here in my [github].

Thank you for following me thus far and I hope it was a good read. Please do well to follow my data progress [here] and you can also connect with me on [LinkedIn]

Reference

Guided Project- [Alex the Analyst]